GSoC '20: Kubeflow Customer User Journey Notebooks with Tensorflow 2.x Keras

An important milestone in my journey of open source

Introduction

Open source software development and Google Summer of Code both started long before the summer of 2020. While the world was starting to grapple with the realities of remote work, the open source community was already thriving on it. Over the course of my college years, I have discovered three things that I am passionate about: open source, machine learning and SRE. Kubeflow brings all three together, and doing a project with this organisation has been a dream come true!

Goal

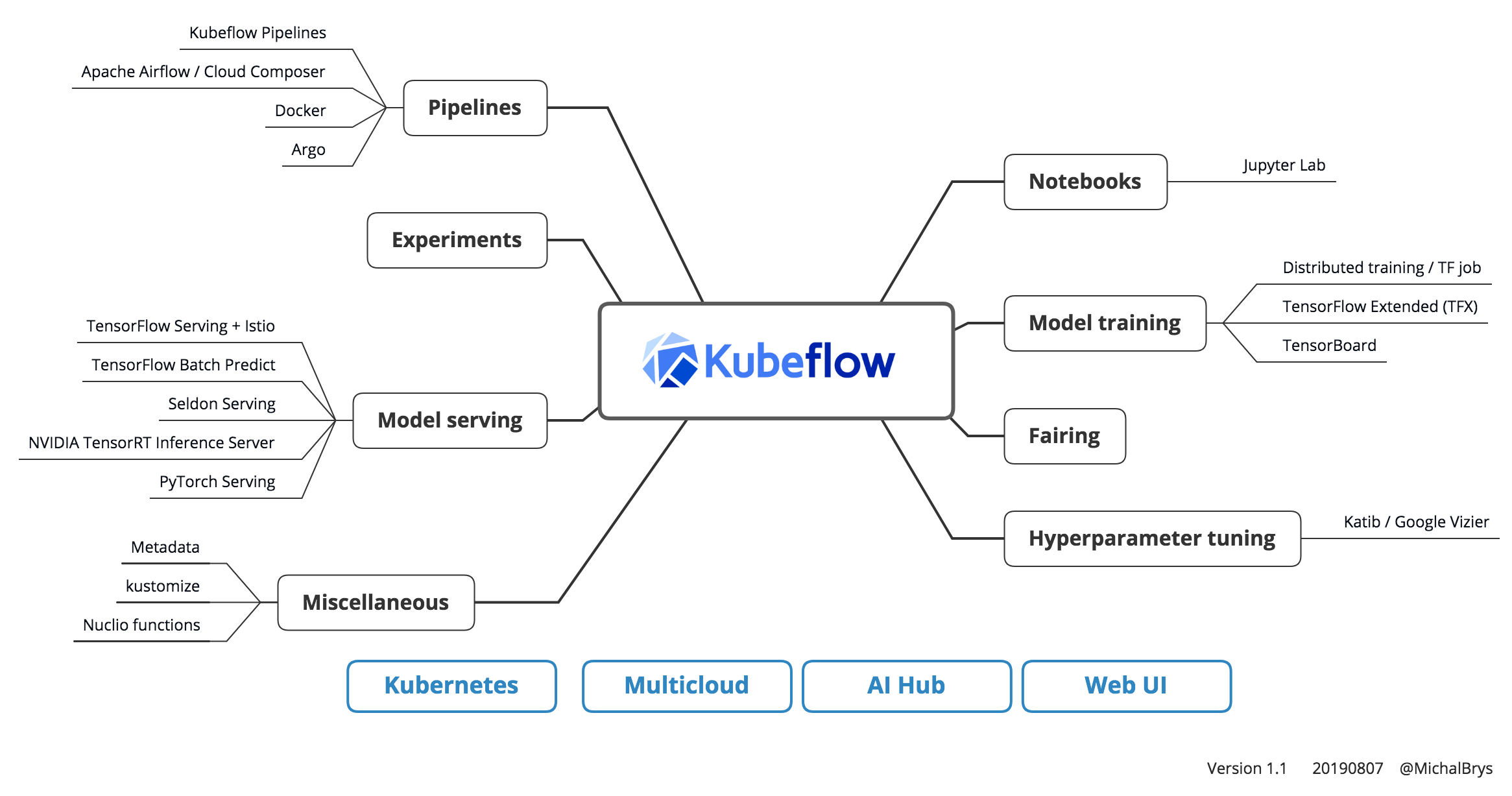

Kubernetes is already an industry standard for managing cloud resources. Kubeflow is on its way to becoming an industry standard for managing machine learning workflows in the cloud. Examples that illustrate Kubeflow functionalities using the latest industry technologies make Kubeflow easier to use and more accessible to all potential users. This project aimed to build samples that take a Jupyter notebook to a Kubeflow deployment, using TensorFlow 2.0 Keras for the backend training code and illustrating the customer user journey (CUJ) in the process. The project also served as a hands-on introduction to large-scale applications of machine learning, bringing in elements of DevOps and SRE, which kept me motivated throughout.

The Kubeflow Community

The Kubeflow community is a highly approachable and close-knit community that reaches out to and helps potential GSoC students well before the application period. With this in mind, I made sure to get feedback on my proposal for the project idea I chose before the application deadline. Mentors Yuan Tang, Ce Gao and Jack Lin were candid in their feedback, and I refined my proposal accordingly. To my sweet surprise, I got selected for the idea!😁 What really helped me during the three-month coding period was the one month of community bonding before it, in which I got to know the community and the technicalities of Kubeflow.

The Project

The examples created as part of this project needed to be easily reproducible to serve their purpose. Initially, the model chosen to demonstrate Kubeflow functionalities was a bidirectional RNN trained on the IMDB Large Movie Review dataset for sentiment analysis, based on a TensorFlow tutorial. Over time, we decided to add another set of examples built around a neural machine translation model trained on a Spanish-to-English dataset, based on another TensorFlow tutorial.

The reasons for choosing these models were:

- The kubeflow/examples repo needed more NLP-related tasks.

- These are more or less the hello world tasks of NLP, so users who go through these samples need not worry about the training code and can focus on Kubeflow’s functionalities.

- These are based on TensorFlow tutorials. Kubeflow tutorials based on TensorFlow tutorials show better coupling between the two.

Repository Structure

I created a repo under my own profile to push commits to regularly, and my mentors consistently reviewed the work I pushed there. This repo has all of my work with the commit history preserved. Each of the two models has the following folder structure, with each file explaining a core Kubeflow functionality:

- <training-model>.py: This is the core training code on which all subsequent examples showing Kubeflow functionalities are based. Please go through it first to learn more about the machine learning task that the subsequent notebooks manage. For example, check the source code of the model used for the text classification task.

- distributed_<training-model>.py: To truly take advantage of multiple compute nodes, the training code has to be modified to support distributed training. The code in the file mentioned above is modified with TensorFlow’s distributed training strategy and hosted here.

- Dockerfile: This is the Dockerfile used to build the Docker image of the training code. Some Kubeflow functionalities require that a Docker image of the training code is built and hosted on a container registry. This Docker 101 tutorial is a good starting point for hands-on training with Docker. For complete beginners in the field of containerization, this introduction can serve as a good starting point. The Dockerfile used with the source code mentioned above can be found here.

- fairing-with-python-sdk.ipynb: Fairing is a Kubeflow functionality that lets you run model training tasks remotely. This Jupyter notebook deploys a model training task to the cloud using Kubeflow Fairing. Fairing does not require you to build a Docker image of the training code first, so its training code resides in the same notebook. To learn more about Kubeflow Fairing, please visit Fairing’s official documentation. To get a better idea of Fairing, you can take a look at the text classification Fairing notebook here.

- katib-with-python-sdk.ipynb: Katib is a Kubeflow functionality that lets you run hyperparameter tuning experiments and reports the best set of hyperparameters based on a provided metric. This Jupyter notebook launches Katib hyperparameter tuning experiments using its Python SDK. Katib requires you to build a Docker image of your training code and host it in a container registry. For this sample, we used gcloud builds to build the required Docker image of the training code along with the training data and host it on Google Container Registry. This is the notebook we used to demonstrate Katib for the text classification task.

- tfjob-with-python-sdk.ipynb: TFJobs are used to run distributed training jobs on Kubernetes. With multiple workers, a TFJob truly leverages the ability of your code to support distributed training. This Jupyter notebook demonstrates how to use TFJob. It uses the Docker image built from the distributed version of our core training code. The TFJob notebook for the text classification task can be found here.

- tekton-pipeline-with-python-sdk.ipynb: Kubeflow Pipelines is a platform that lets you build, manage and deploy end-to-end machine learning workflows. This Jupyter notebook bundles Katib hyperparameter tuning and TFJob distributed training into one Kubeflow pipeline. The pipeline used here runs on Tekton as its backend. Tekton is a Kubernetes-native framework for creating efficient continuous integration and delivery (CI/CD) systems. The pipeline notebook for the text classification task can be found at this place.
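To make the TFJob item in the list above a little more concrete, here is a minimal sketch of the kind of resource such a job boils down to, written as a plain Python dictionary. It is not the code from the notebooks, which use the Python SDK. The job name, namespace, image path and worker count are hypothetical placeholders, and the helper names `build_tfjob_manifest` and `describe_task` are made up for illustration. The second helper reads the `TF_CONFIG` environment variable that each replica of a TFJob receives, which is how distributed TensorFlow code learns its role in the cluster.

```python
import json


def build_tfjob_manifest(name, namespace, image, workers=2):
    """Return a TFJob custom resource as a plain dict.

    All argument values are illustrative placeholders and are not taken
    from the original notebooks.
    """
    container = {
        # The TFJob operator expects the training container to be
        # named "tensorflow".
        "name": "tensorflow",
        "image": image,
    }
    return {
        "apiVersion": "kubeflow.org/v1",
        "kind": "TFJob",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "tfReplicaSpecs": {
                "Worker": {
                    "replicas": workers,
                    "restartPolicy": "OnFailure",
                    "template": {"spec": {"containers": [container]}},
                }
            }
        },
    }


def describe_task(tf_config_json):
    """Extract (task type, task index, number of workers) from TF_CONFIG."""
    config = json.loads(tf_config_json)
    task = config["task"]
    workers = config["cluster"].get("worker", [])
    return task["type"], task["index"], len(workers)


# Hypothetical values; replace them with your own project and image.
manifest = build_tfjob_manifest(
    name="example-tfjob",
    namespace="default",
    image="gcr.io/<your-project>/<your-image>:latest",
)
print(json.dumps(manifest, indent=2))

# A made-up TF_CONFIG value of the shape a worker would see.
sample_tf_config = json.dumps(
    {
        "cluster": {"worker": ["example-tfjob-worker-0:2222",
                               "example-tfjob-worker-1:2222"]},
        "task": {"type": "worker", "index": 1},
    }
)
print(describe_task(sample_tf_config))
```

Keeping the manifest as a dictionary makes it easy to inspect or dump to YAML before submitting it, while the SDK route used in the notebooks wraps the same fields in typed objects.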

Merged PRs

During the community bonding period, I opened numerous small issues, along with PRs to fix them, as I came across problems while reading the documentation or implementing an example. I did this to get to know the Kubeflow community better.

For the main project, I copied the finished notebooks and the final work product into a directory in my fork of the kubeflow/examples repo and opened a PR to add them to Kubeflow’s official repo. The PR was merged, and the code now resides in the kubeflow/examples/tensorflow_cuj directory, marking the completion of the project.

Special Thanks

Special thanks are due to -